We all use the services of professionals.

And many professionals use AI.

But as consumers, do we really want our service providers to use AI? Are we ready for this?

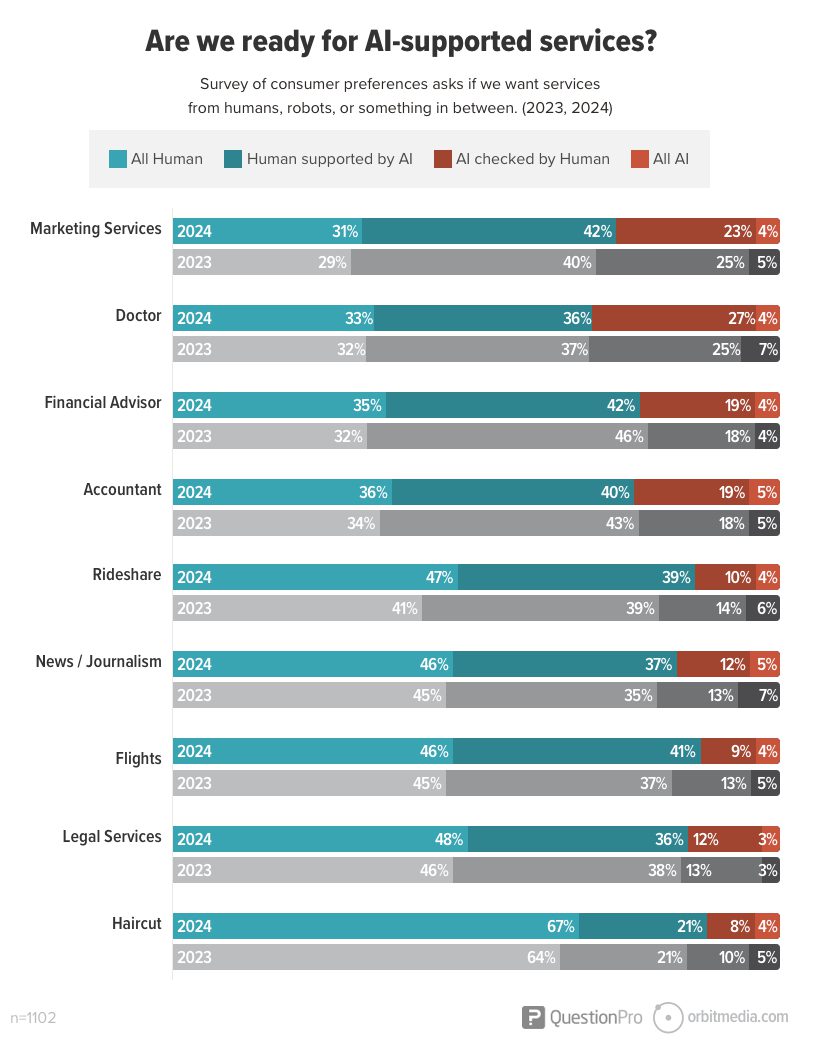

The only way to find answers to questions like this is to ask people. So we partnered with QuestionPro and asked 1,100+ consumers if they want their doctor, accountant and lawyer to use AI. The results were mixed, as you’ll soon see. But here are the big takeaways:

- For most services, consumers want AI to be involved

- We want AI involved more for some services than others

- We are a bit more skeptical of AI than a year ago

For each service in the chart below, the lighter colors indicate more trust in humans. The darker colors indicate more trust in AI. Blue is 2024 and gray is 2023.

Generally, consumers want AI to help their service providers. For every type of service in the survey (with one exception, hair stylists), the majority of respondents showed a preference for some amount of AI support. Few of us want a robot to completely replace the human professional, but most of us want AI to help.

Let’s look at how the AI-readiness of consumers varies across these nine different service categories.

This is obviously interesting from the sociological perspective (where’s our culture headed?), but because this is a marketing blog, we’ll also provide a marketing perspective (is it time to put AI into our marketing messages?).

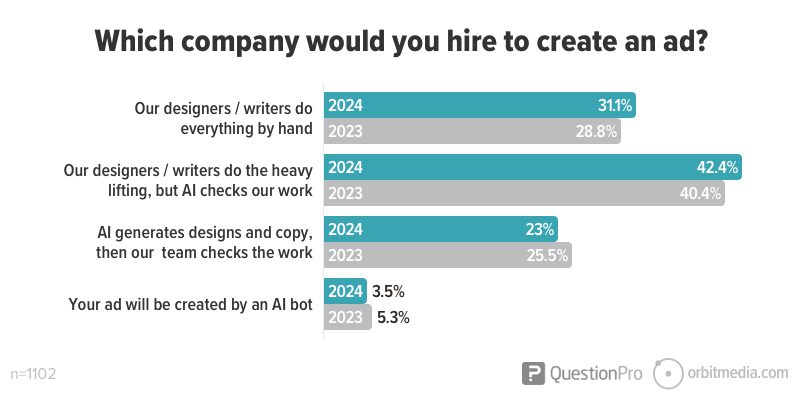

You’re hiring a marketing company to create an advertisement. Which company sounds best to you?

We start with creative services. Do businesses want their marketers to use AI to draft copy and create visuals? It seems that the answer is yes, more than the other categories. 69% of respondents want their creative agency to get help from AI.

Trust in AI-powered creative services seems to have declined a bit since last year. But the difference is within the 3% margin of error for the survey.

There are a lot of AI applications for creative firms: audience research, competitive analysis, draft copywriting, editing, generating design concepts and visual assets, reporting and analytics. The list goes on and on.

Ultimately, marketing is a test of empathy, so I disagree with the 4% of respondents who would like to replace creatives with robots.

- 31% Our designers/writers do everything by hand

- 42% Our designers/writers do the heavy lifting, but we check our work with AI

- 23% We start with AI-generated designs and copy, then our team checks the work

- 4% Your ad will be created by an AI bot

We asked social media agency founder, Chris Madden, for his thoughts on embracing AI.

Chris Madden, Matchnode: “Our team at Matchnode has consistently embraced technological advancements, with strategic growth and lifelong learning among our core values. Thus, we experiment with AI tools, looking for productivity boosts (and potential disruptions to our business) on a regular basis. To some extent, an individual’s or a company’s mindset towards AI in marketing services serves as a revealing Rorschach test, reflecting their confidence, adaptability, and capacity to act in uncertain times. The genie is out of the bottle. The creative explosions, sheer human delight, and efficiency gains provided by Midjourney, ChatGPT, AutoGPTs, and the forthcoming personal AI agents are truly awe-inspiring.”

What would a marketing message promoting an AI-powered agency look like? “Our team uses AI to better understand your audience and to do rapid prototyping of design mockups and draft copy, which our human experts tune up by hand with loving care and attention.”

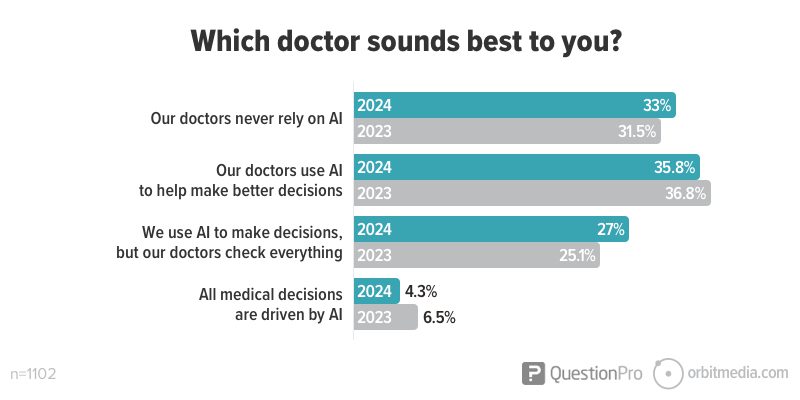

You’re looking for a new doctor. Which option sounds best to you?

We’re answering the question: Are we ready to have AI involved in our healthcare?

AI is already powering some medical decisions but we’re not really seeing AI in healthcare marketing messaging yet. I have yet to see a healthcare ad promising to have AI look over their doctors’ shoulders. To the contrary, healthcare marketing usually emphasizes a human, personal angle.

The results show that the majority of respondents would like AI involved in their healthcare decisions. Only one third of respondents want human-only healthcare.

Surprisingly, 4% are ready for a robot doctor, though this number has gone down since last year.

- 33% Our doctors never rely on AI

- 36% Our doctors use AI to help make better decisions

- 27% We use AI to make decisions, but our doctors check everything

- 4% All medical decisions are driven by AI

Ahava Leibtag, Aha Media: “Physicians incorporate AI into their daily practices, much like their traditional tools—scalpels and stethoscopes—but don’t depend solely on them. AI lacks the capacity for critical analysis and empathy, crucial for finding solutions that minimize patient harm. While AI is a useful tool, we continue to champion the human element in healthcare—the compassionate and analytical minds behind the technology that shapes patient outcomes. In healthcare, combating misinformation remains our biggest challenge. Clarity in our content is more important than ever. When talking about AI in healthcare, we have to separate its applications. The difference between a chatbot gauging symptoms and a doctor’s seasoned diagnosis is stark. And with AI now sifting through data to suggest outcomes for clinical trials, the stakes are higher. But our focus is on content. As Google and other search engines start to integrate AI-generated answers into health queries, our commitment to fact-checking every detail intensifies. Misinformation breeds distrust. When a hospital logo appears next to an AI-generated search result, people will trust it. Until it’s wrong. We can’t let healthcare communication become the sole province of machines.”

What would a marketing message promoting an AI-supported doctor’s office look like? “Our team of doctors uses the world’s largest medical datasets, using AI to confirm that every diagnosis is as accurate as humanly possible.”

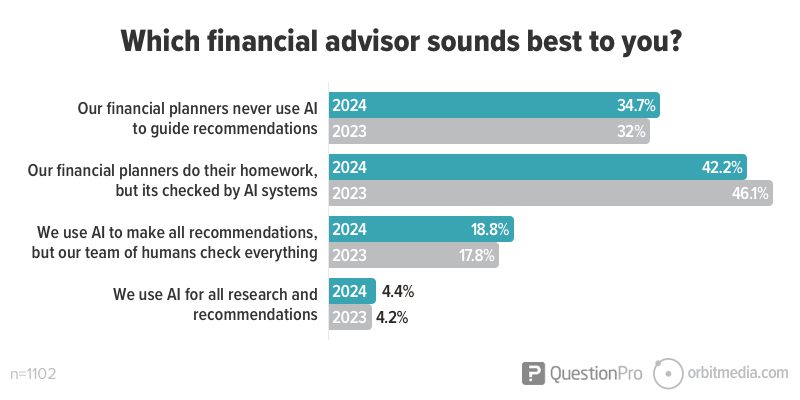

You’re considering using a new financial advisor. Which option sounds best to you?

Millions of US consumers already use apps for investing. Some of these apps use algorithms to recommend investment allocation. Some of those apps use AI. So there is already wide adoption of algorithm-driven financial advice. But our question here assumes that you’re looking for a human advisor, so the answers are in that context.

Consumers are very interested in having AI involved in their finances. Two thirds of consumers want AI involved in their retirement planning. Trust in AI appears to have slipped a bit since last year.

- 35% Our financial planners never use AI to guide recommendations

- 42% Our financial planners do their homework, but it’s checked by AI systems

- 19% We use AI to make all recommendations, but our team of humans checks everything

- 4% We use AI for all research and recommendations

This is a category that has serious security requirements, so using publicly available tools built on large language models isn’t always an option. Financial marketing pro Stacy Klein explains.

Stacy Klein, VP of Marketing, Wintrust Financial: “In highly regulated industries like finance, some content marketers don’t have online work access to non-proprietary tools like ChatGPT due to risk. But they can continue to rely on SEO and search to provide content context–that reliance on that part of AI is not going away (until it does). These survey results show that the goal for marketers in this field stays the same: The value of the human relationship between a consumer and their financial expert is the most crucial point to make.”

So what might a marketing message promoting an AI-powered financial planner look like? “Our financial models combine AI and our proprietary datasets, producing insights that are unavailable to investors who simply rely on public data.”

…a little wordy, but you get the idea.

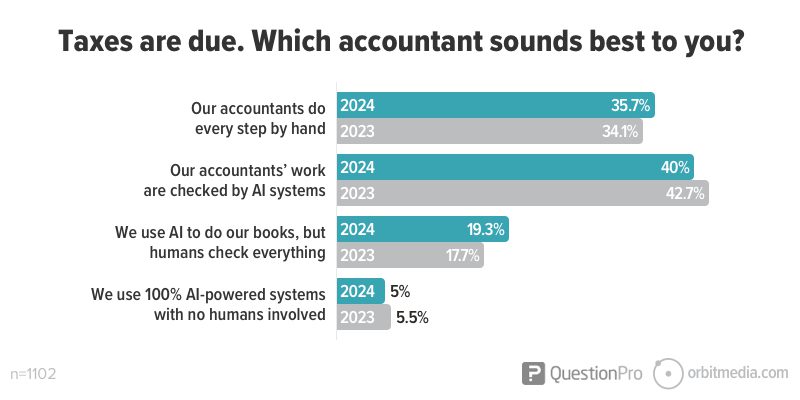

You’re considering using a new accountant. Which option sounds best to you?

Another money question with similar responses. Maybe because accounting is a matter of accuracy and compliance, we trust professionals who adopt technology. Technology correlates with competence.

- 1970’s You want an accountant who uses a calculator

- 1980’s You want an accountant who uses a computer

- 2000’s You want an accountant who uses your software

- 2020’s You want an accountant who uses AI

- 2030’s You want an AI accountant…

This is another category where consumers are very interested in AI. Almost two thirds of consumers want their accountants to get help from AI. This is slightly lower than last year, but still within the margin of error.

- 36% Our accountants do every step by hand

- 40% Our accountants’ work is checked by AI systems

- 19% We use AI to do your books, but humans check everything

- 5% We use 100% AI-powered systems with no humans involved

This may be a category where AI marketing messages begin to appear first. Here’s what it might sound like. “Our AIs are trained in-house on decades of financial records, checked by certified human experts, ensuring accurate and efficient returns.”

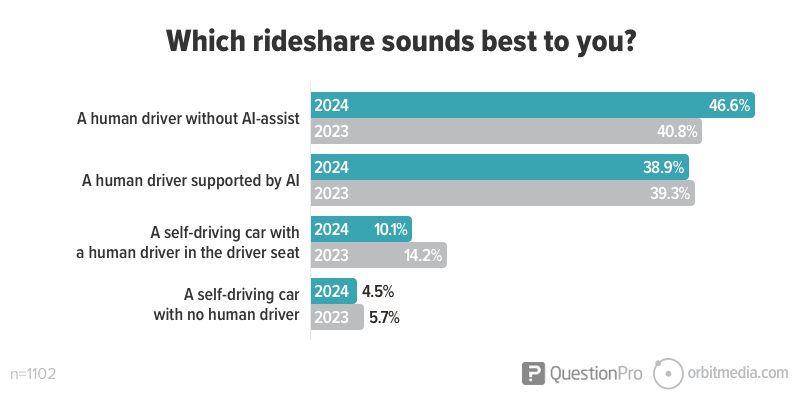

You’re using a rideshare app to call a car. Which option do you prefer?

Rideshare passengers are greeted with a human hello. That’s nice. But we all know that the convergence between apps and self-driving cars is coming. Are we ready for it? Not yet.

95% of respondents want a human in the driver’s seat. And more than last year, people want a driver with their hands on the wheel, watching the road.

What about safety? We’ve all seen headlines about self-driving cars involved in fatal accidents. There have been several. Never mind that there are 42,000 car-related deaths per year in the US alone, most of which are caused by human error. Self-driving cars are safer. This is a case study in how stories are more persuasive than data.

- 47% A human driver without AI-assist

- 40% A human driver supported by AI

- 10% A self-driving car with a human driver in the driver seat

- 5% A self-driving car with no human driver

But safety and price aren’t the only factors. It’s nice to chat with your Lyft driver. Hear what music they’re listening to. It feels good to tip. But marketing for self-driving ride-share cars will likely come down to price.

“…or save 92% with a self-driving car.”

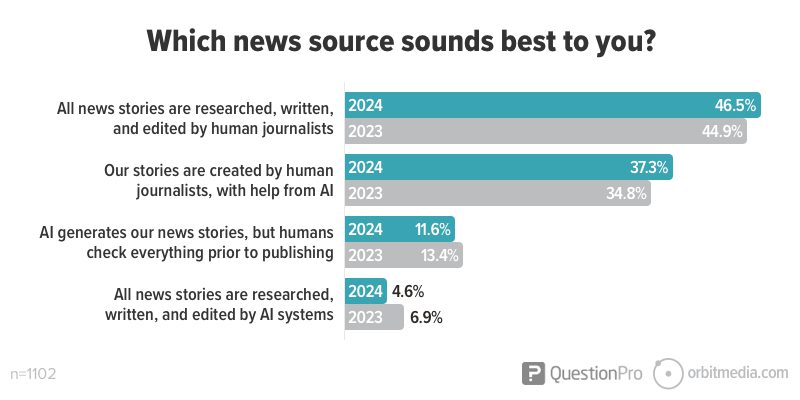

You’re considering subscribing to a new source of news. Which of these options sounds best to you?

We’ve all used apps that aggregate news stories. But the articles themselves are written by human journalists, and vetted by human editors. Are we ready to have robots choose the topics and write the stories?

Apparently, yes. 16% of respondents want AI to write their news. This has also declined since last year.

- 47% All news stories are researched, written and edited by human journalists

- 37% Our stories are created by human journalists, with help from AI

- 12% AI generates our news stories, but humans check everything prior to publishing

- 5% All news stories are researched, written and edited by AI systems

This one I really don’t understand. News media is critical to democracy. I just don’t trust algorithms that much. Newsrooms have already been hollowed out by tech-driven disruption. If news readers don’t care, the trend will continue.

In 2023, we asked news media strategist Charlie Meyerson for his thoughts.

Charlie Meyerson, Chicago Public Square: “I’m torn on this, for personal and professional reasons. I so enjoy the writing and reporting process that I can’t get fully excited about technology that would mean fewer such opportunities for humans. And yet I must confess that my own writing—for my email news briefing, Chicago Public Square (voted Best Blog by Chicago Reader readers!)—is made better daily by artificial-intelligence-driven tools like Grammarly, which catches typos and grammatical errors galore. And, in fact, as Politico’s Jack Shafer has noted, ‘technology has been pilfering newsroom jobs for more than a century’—from cameras that obsoleted illustrators; to digital composition that displaced typesetters; to software that gutted editing slots; to the internet, which threatens the livelihoods of press workers, delivery folks and newspaper librarians; to transcription bots that have displaced stenographers. Why should reporters be any different?’ I can attest from personal experience over the decades that some of the best reporters aren’t great writers. If AI can help people with a nose for news—but not a great grasp of English, some because it wasn’t their first language—write better under deadline, I call that a win. P.S., April 2024: I should add that my particular journalistic passion over the years—rounding up the news, in the form of newscasts, website front pages and email newsletters—is a practice AI could subsume. As media watcher Mark Stenberg posits, ‘the only kind of news coverage that has any degree of insulation from the coming AI revolution is information that exists nowhere else in the world, information that you have brought together through reporting.’”

What’s the pitch of an AI-driven news source? “Our AI crawlers read 1M+ articles per day, then summarize it all into the three stories that are most important to you.”

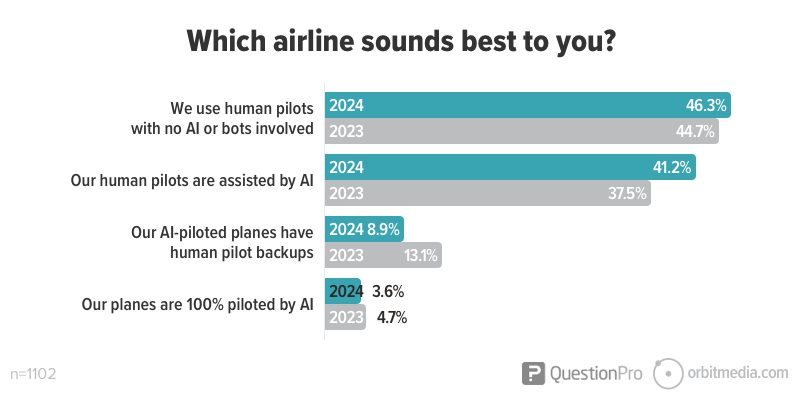

You’re buying a plane ticket. The airline offers several options. Which sounds best to you?

In this case, consumers are lagging behind the tech trends. 46% of respondents said they’d like a human pilot to fly without any help from automation, but that’s not how commercial airlines work.

In reality, except for takeoffs and landings, autopilot is flying the plane. This frees the pilot to do other important things, such as track weather, monitor the fuel system, follow the clearances from Air Traffic Control, etc.

- 46% We use human pilots with no AI or bots involved

- 41% Our human pilots are assisted by AI

- 9% Our AI-piloted planes have human pilot backups

- 4% Our planes are 100% piloted by AI

Again, we see a slight decline in the trust in technology. Because consumers aren’t ready for it, don’t expect to see AI in the marketing messages of airlines anytime soon.

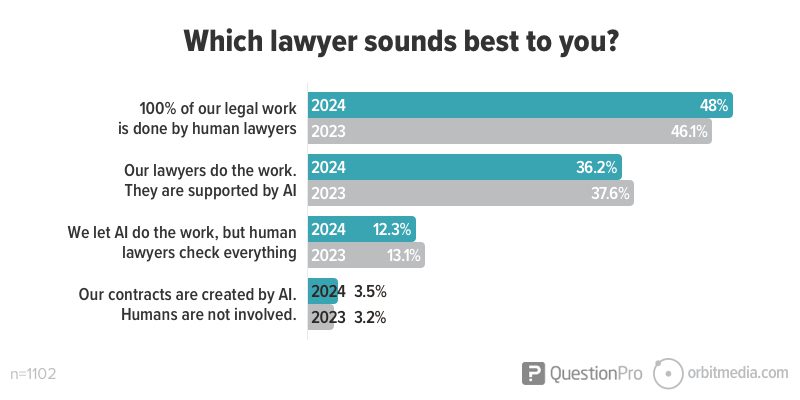

You’re hiring a lawyer to help with a contract. Which law firm sounds best to you?

Lawyers are word merchants. They’re definitely impacted by AI. An AI-powered law firm should be more efficient and more thorough. But do consumers even want their attorneys to use AI? It’s split.

About half of the respondents would like their lawyers to get help from AI. If you’re paying by the hour, anything that saves time is a good thing, right?

- 48% 100% of our legal work is done by human lawyers.

- 36% Our lawyers do the work. They are supported by AI.

- 12% We let AI do the work, but human lawyers check everything.

- 4% Our contracts are created by AI. Humans are not involved.

This is a category where AI in marketing messages may appear soon. Here’s what you may soon see on a lawyer’s website: “Our AI-powered legal discovery process analyzes centuries of case law in minutes, saving you time and strengthening your legal position.”

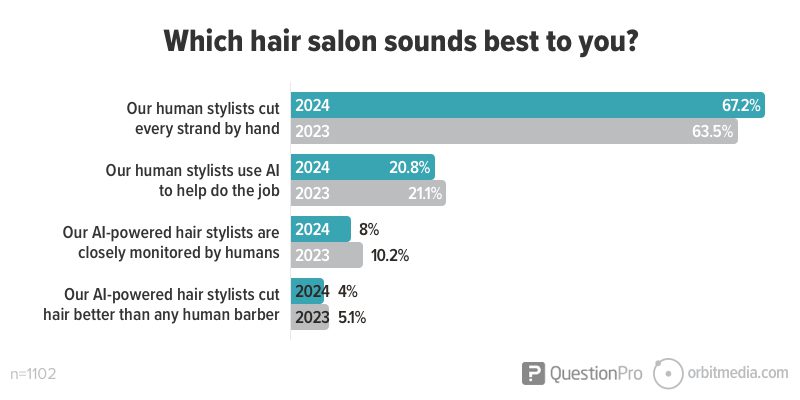

You’re considering a new hair salon. Which option sounds best to you?

Hard to imagine a BarberBot? Me too. Sounds weird. This was the only question where the majority of respondents wanted no AI involvement at all.

This question puts the other responses in context. It shows that some consumers are just very trusting of technology, even when the involvement of technology is undefined.

How could AI help you with your hair? Maybe by showing pictures of you sporting various styles based on your input. “Show me what I’d look like with full-bodied, dark hair on top, with a tight fade on the sides. No, try it a little longer. Yeah, that’s good. Let’s go with that…”

- 67% Our human stylists cut every strand by hand

- 21% Our human stylists use AI to help do the job

- 8% Our AI-powered hair stylists are closely monitored by humans

- 4% Our AI-powered hair stylists cut hair better than any human barber

Image source: “DALL-E prompt: Create an image of a robot hipster barber cutting the hair of a happy trusting person. 16:9”


Who trusts AI?

We looked for correlations between demographics (age, race and gender) and trust in AI and found only weak correlations. Here are the strongest correlations we found:

- Younger people are more trusting of AI-powered rideshare services (0.10)

- Older folks were slightly more likely to trust AI-supported healthcare (0.02)

- Women were a bit less trusting of AI across the board (-0.06) and especially distrustful of AI-supported accounting services (-0.10)

People across the spectrums of race, age and gender seem to share similar levels of trust in AI-supported services.

A quick, practical digression: how did I find these correlations? The QuestionPro tools are excellent, but as an experiment, I decided to let ChatGPT do the analysis. I have a ChatGPT Plus account, which allows me to upload files. It was a two-prompt process, which often seems to work better.

First I gave it the big picture…

This is a survey of more than a 1000 people in the US showing the extent to which they trust AI-supported services. Summarize.

[uploaded the chart from the top of this article]

Once we had established the context, I asked it to analyze.

You are a data scientist, skilled at finding correlations in survey data. Here is the raw data for that same survey showing demographic information for the respondents. How does age, race and gender correlate with trust in AI?

[uploaded the CSV file of the raw data with the irrelevant columns and rows removed]

Scanning through the response and the data, it looked good. The bullet list above is my summary of AI’s response.
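For readers who would rather check numbers like these by hand, here is a minimal sketch of the same kind of analysis in plain Python. It is not the method ChatGPT used, which we can't see. The toy rows, the column order, and the 0-to-3 trust coding (0 = human only, 3 = AI only) are all assumptions made up for illustration, not the survey data.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a column is constant")
    return cov / sqrt(var_x * var_y)

# Hypothetical toy rows, NOT the survey data: (age, is_female, rideshare_trust).
# rideshare_trust uses an assumed coding: 0 = human only ... 3 = AI only.
rows = [
    (22, 1, 2), (31, 0, 3), (45, 1, 1), (58, 0, 1), (63, 1, 0), (37, 0, 2),
]
ages = [r[0] for r in rows]
is_female = [r[1] for r in rows]
trust = [r[2] for r in rows]

age_r = pearson(ages, trust)
gender_r = pearson(is_female, trust)
print(f"age vs. rideshare trust:    {age_r:+.2f}")
print(f"female vs. rideshare trust: {gender_r:+.2f}")
```

The sign of each coefficient depends on how the columns are coded. In this sketch, a negative value for age would mean older respondents chose less AI involvement. Values close to zero, like the ones in the bullet list above, indicate little linear relationship.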

Is trust in AI declining?

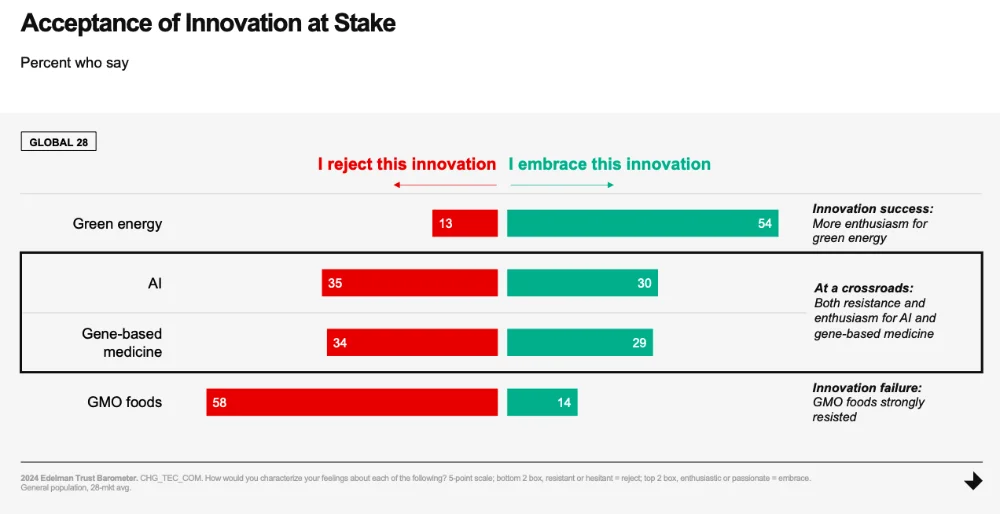

We don’t have enough data to conclude this yet. Our comparison covers only one year, and we surveyed only 1,100 consumers in one market, the US. It showed a slight decline in our trust in AI technology. But this does align with the findings of a much larger survey, the Edelman Trust Barometer. They surveyed 32,000 people in 23 countries. Take a look:

In this dataset, 35% of respondents reject AI innovation. 30% embrace AI innovation. They conclude that AI is at a crossroads, where there is both resistance and enthusiasm for advancement.

What drives trust in AI? There are many factors: high-profile headlines about AI errors, fears about labor market impact, general distrust in big tech and big tech leaders and, of course, our own human bias.

AI technology has been pervasive for years within systems all around us. But now that generative AI is accessible to all of us, we are deciding how much we trust it. To what extent will we embrace it? Likely, we will be divided.

Research Methodology

Huge thanks to our research partner, QuestionPro, for collaborating with us on this quick project. The tool is very easy to use and responses came together quickly.

Who responded to the survey?

There were 1,075 respondents to the first survey, gathered on April 18, 2023 from a broad section of the general population. The second dataset was from a survey of 1,102 respondents, gathered on April 5, 2024.

Here is a bit about the demographics for the newer dataset:

- Age: 12% were 18-24, 18% were 25-34, 20% were 35-44, 17% were 45-54

- Gender: 63% female, 37% male

- Race or ethnicity: 67% identified as White, 17% identified as Black or African American, 8% identified as Hispanic or Latino

Our dataset is close to being representative of the US population, but it does skew a bit younger and more female.
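The 3% margin of error mentioned earlier can be roughly reproduced from the two sample sizes. The sketch below uses the standard formula for a proportion. It assumes a simple random sample, a 95% confidence level (z = 1.96) and the worst case of p = 0.5. None of those assumptions are stated in the survey details, so treat the output as a ballpark check, not an official figure.

```python
from math import sqrt

Z_95 = 1.96  # z-score for a 95% confidence level (an assumption, not stated in the survey)

def margin_of_error(n, p=0.5, z=Z_95):
    """Margin of error for one proportion from a simple random sample of size n."""
    return z * sqrt(p * (1 - p) / n)

def margin_of_error_diff(n1, n2, p1=0.5, p2=0.5, z=Z_95):
    """Margin of error for the difference between two independent proportions."""
    return z * sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)

moe_2023 = margin_of_error(1075)               # 2023 survey sample size
moe_2024 = margin_of_error(1102)               # 2024 survey sample size
moe_change = margin_of_error_diff(1075, 1102)  # year-over-year comparison

print(f"2023 survey:      +/- {moe_2023:.1%}")
print(f"2024 survey:      +/- {moe_2024:.1%}")
print(f"change 2023-2024: +/- {moe_change:.1%}")
```

Under these assumptions, each survey lands near the 3% figure. The margin for a year-over-year change is somewhat wider, because both samples contribute uncertainty, so small shifts between 2023 and 2024 should be read with caution.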

How did we select these service categories?

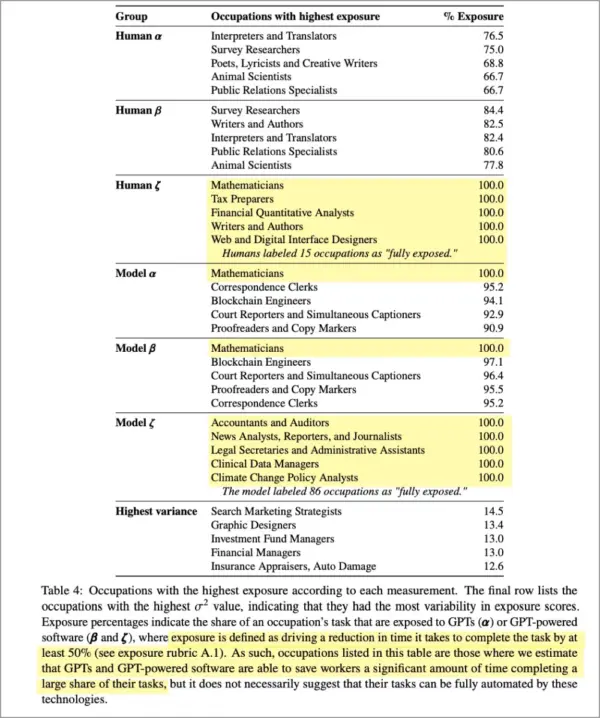

We referred to OpenAI’s 2023 research on the labor market impact of AI, and selected the occupations with the highest exposure to impact from AI, according to their various data models.

“Exposure” is defined as AI tools driving a reduction in time it takes to complete a task by at least 50%. So these are the service categories where AI impact is most likely. We added the hairstylist category, partly for comedy and partly for reference, putting the other data in context.

Here is the most relevant section of their research, with the professions with an estimated 100% exposure highlighted: